As a long-time CTO and now CEO of Capella, a data management company, I have seen firsthand how manufacturing companies can use AI and machine learning to get valuable insights from their production data. When factories analyze real-time data from sensors and equipment, they can find ways to improve quality, speed, and throughput.

In this post, I will discuss how AI and real-time production analytics can benefit manufacturers of all sizes. I'll touch on the following:

- The importance of real-time data in manufacturing

- What types of analytics capabilities can be enabled with AI

- Where to start with AI and real-time production analytics

- Real-world examples and use cases

The Need for Real-time Data in Manufacturing

In the past, manufacturers had limited visibility into real-time performance. Reports delivered insights, but they were typically based on data that was already days old. By then, it was too late to resolve production issues as they occurred.

Today, with the advent of Industrial Internet of Things (IIoT) sensors across the factory floor, manufacturers can capture vast volumes of data in real-time. These sensors monitor temperature, cycle times, pressure, vibration, humidity, and more. However, manufacturers gain value from this deluge of data only when it is properly managed and analyzed.

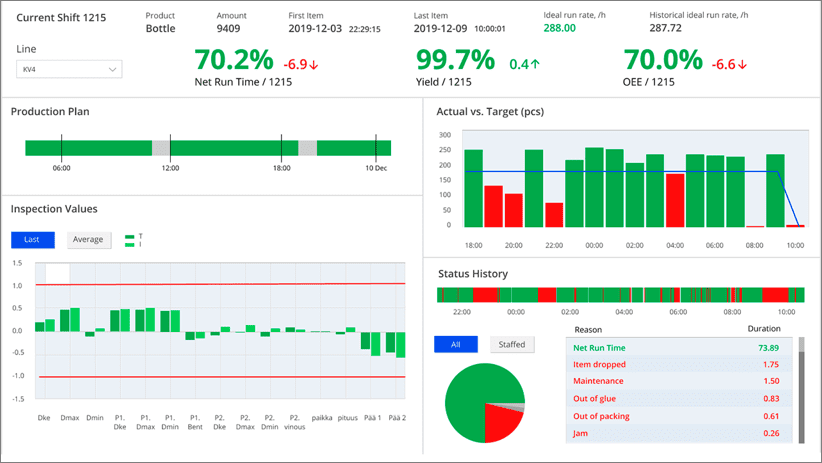

Manufacturers need intelligent systems that can consume and contextualize sensor data as it flows off the factory floor. Using time-series databases and real-time processing, manufacturers can achieve:

- Fast detection of production anomalies - identify parts that fall out of quality tolerances

- Rapid root cause analysis - pinpoint whether a sensor, asset, or environmental condition contributed to defects

- Corrective actions to minimize scrap - tune processes before large batches are impacted

These capabilities allow manufacturers to course-correct issues through automated feedback loops. The outcome is maximized yield, quality, and productivity.
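To make the first capability concrete, here is a minimal sketch of a tolerance check on measured parts. The `Tolerance` class, the part IDs, and the 25.0 mm ± 0.05 mm spec are hypothetical values I chose for illustration; in a real plant the limits would come from the engineering drawing or the MES.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tolerance:
    """Hypothetical spec limits for one measured dimension."""
    nominal: float
    plus: float
    minus: float

    def contains(self, measured: float) -> bool:
        # A part passes when it lies inside [nominal - minus, nominal + plus].
        return self.nominal - self.minus <= measured <= self.nominal + self.plus


def out_of_tolerance(measurements, tolerance):
    """Return the IDs of parts whose measurement falls outside the tolerance."""
    return [part_id for part_id, value in measurements
            if not tolerance.contains(value)]


# Illustrative bore diameters in millimetres (made-up data).
bore_spec = Tolerance(nominal=25.0, plus=0.05, minus=0.05)
measurements = [("P-001", 25.01), ("P-002", 25.08),
                ("P-003", 24.97), ("P-004", 24.90)]
rejected = out_of_tolerance(measurements, bore_spec)
print(rejected)
```

In a streaming setup, the same check would run on each reading as it arrives, so that a drifting process is caught after a handful of parts rather than after a full batch.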

AI and Machine Learning for Manufacturing Analytics

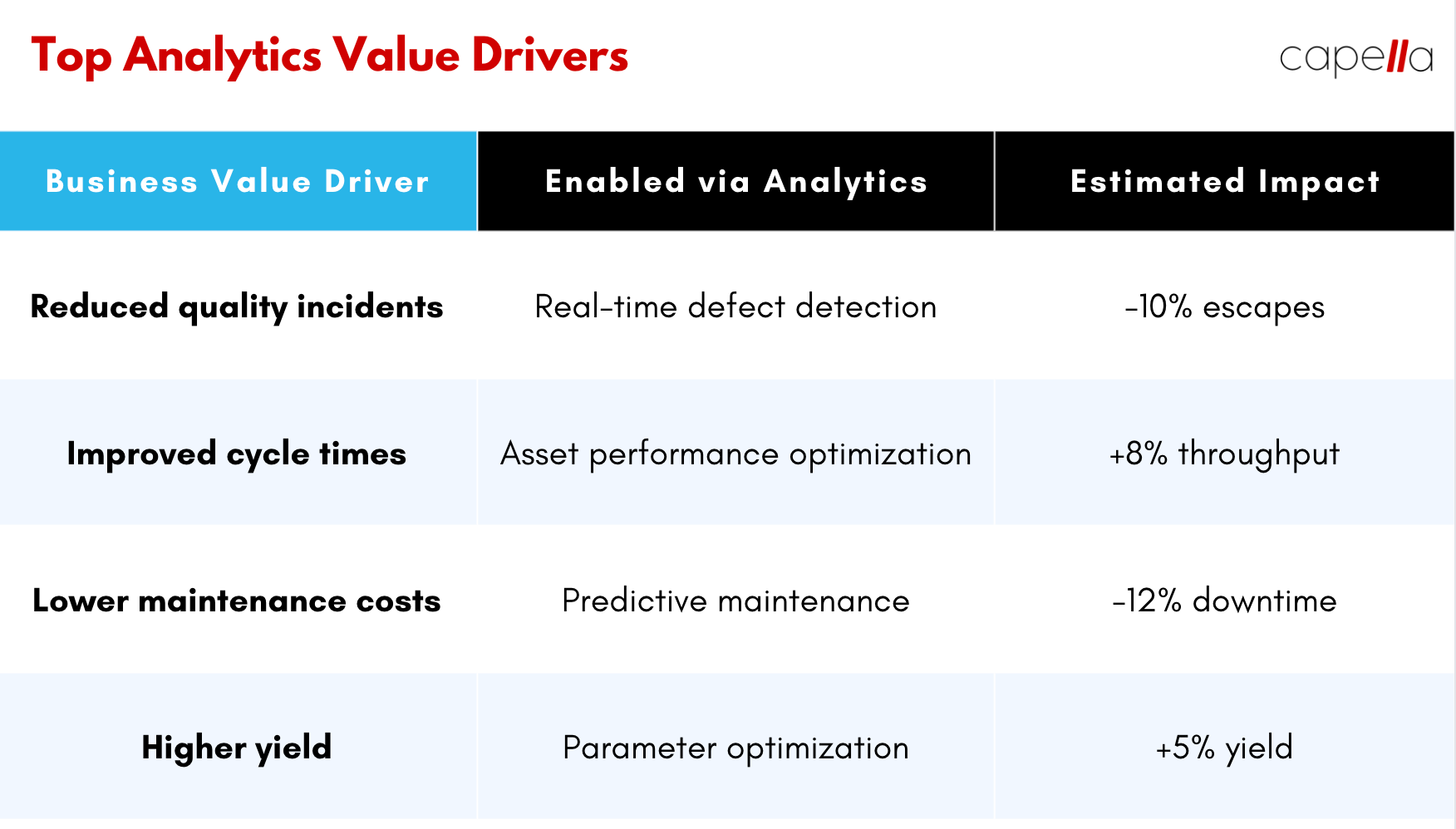

With real-time production data available, manufacturers can apply techniques like machine learning and AI to uncover new insights:

Predictive maintenance

By analyzing real-time vibration, temperature, and other sensor data from equipment, AI algorithms can determine when assets may be prone to failure. This allows for proactive maintenance prior to breakdowns.
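As a rough illustration of the idea, the sketch below fits a straight line to a slowly rising sensor reading and estimates how long it will take to reach an alarm threshold. The linear-trend assumption, the function name `hours_until_threshold`, the bearing temperatures, and the 70 °C threshold are all my own simplifications; production predictive maintenance models are usually far richer than this.

```python
def hours_until_threshold(hours, values, threshold):
    """Fit a least-squares line and estimate hours until it reaches `threshold`.

    Returns None when the trend is flat or falling, because such a trend
    never reaches a threshold that lies above the data.
    """
    n = len(hours)
    mean_x = sum(hours) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in hours)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, values))
    if sxx == 0:
        return None  # all samples share one timestamp, no trend to fit
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing_hour = (threshold - intercept) / slope
    # Report time remaining after the most recent sample, never negative.
    return max(0.0, crossing_hour - hours[-1])


# Illustrative daily bearing temperatures in degrees Celsius (made-up data).
hours = [0, 24, 48, 72, 96]
bearing_temp_c = [60.0, 61.0, 62.0, 63.0, 64.0]
remaining = hours_until_threshold(hours, bearing_temp_c, threshold=70.0)
print(f"Estimated hours until threshold: {remaining:.0f}")
```

Even a crude estimate like this can be enough to decide whether maintenance fits into the next planned stop or needs to be scheduled sooner.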

Yield optimization

AI can combine data on equipment performance, quality measurements, and environmental conditions to determine ideal parameters for maximizing yield and minimizing defects. These parameters can then update dynamically based on real-time data.

Intelligent defect detection

As products move down the assembly line, AI-powered vision systems can analyze features to identify subtle defects - often imperceptible to humans. This allows quicker corrections to minimize quality issues.

Anomaly detection

Machine learning algorithms can baseline “normal” patterns in time-series sensor data. By comparing real-time data to these baseline models, the algorithms can detect anomalies indicative of potential problems. Human domain experts can then investigate the root causes.
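One simple way to picture this is a rolling z-score: keep a window of recent "normal" readings and flag any new reading that sits too many standard deviations away from the window mean. The sketch below is one possible baseline, not the method any particular product uses; the class name, window size, threshold of 3, and vibration values are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


class RollingBaselineDetector:
    """Flags readings that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 50, threshold: float = 3.0,
                 min_samples: int = 10):
        self.window = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def update(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the baseline."""
        is_anomaly = False
        if len(self.window) >= self.min_samples:
            mu = mean(self.window)
            sigma = stdev(self.window)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Learn only from normal readings so outliers do not shift the baseline.
        if not is_anomaly:
            self.window.append(value)
        return is_anomaly


# Synthetic vibration readings (mm/s) with one injected spike at index 45.
readings = [2.0 + 0.1 * ((i * 7) % 5 - 2) for i in range(60)]
readings[45] = 6.5

detector = RollingBaselineDetector(window=30, threshold=3.0)
anomalies = [i for i, r in enumerate(readings) if detector.update(r)]
print(anomalies)
```

The design choice worth noting is that flagged readings are kept out of the window. Otherwise a sustained fault would gradually become the new "normal" and stop being reported.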

Automated root cause analysis

Using the real-time sequence of what changed on the factory floor prior to a defect, AI techniques can analyze and pinpoint the most likely root causes - whether they stem from equipment failure, environmental fluctuations, input materials, or another source.

These techniques allow manufacturers to reduce unplanned downtime, minimize scrap and rework, improve engineering and maintenance practices, and optimize operations for maximum throughput.

Getting Started with AI and Real-time Production Analytics

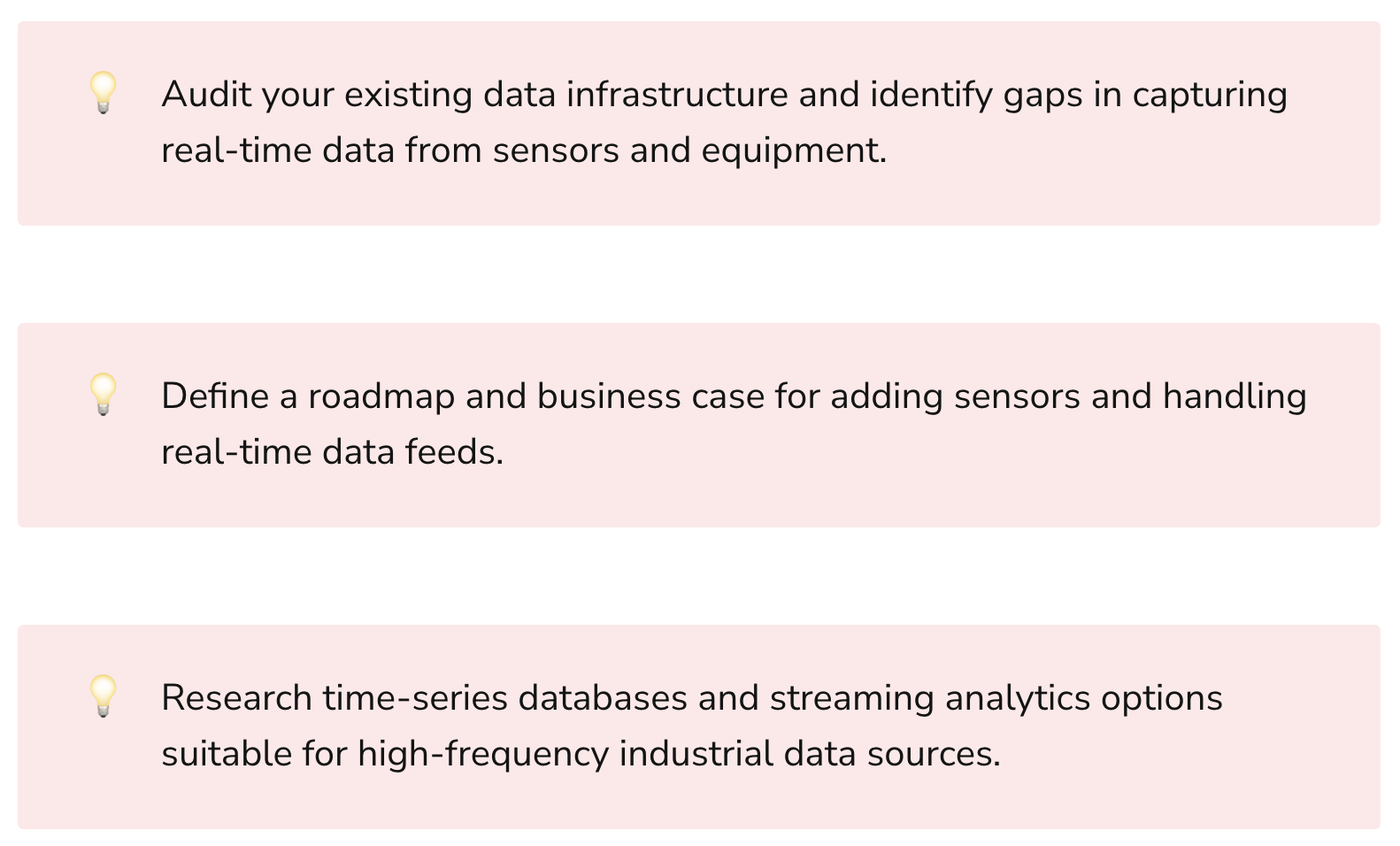

Implementing AI-based manufacturing analytics using real-time production data may seem complex, but getting started is within reach for manufacturers of all sizes and data maturity levels. Here are some best practices:

Start small, focused, and iterative - Many manufacturers have vast volumes of historically siloed data spanning systems and factories worldwide. Rather than boil the ocean, start with a targeted pilot focused on a single process, product family, or factory. Learnings from the initial implementation can inform expansion into other processes and sites.

Prioritize data pipelines and management - Real-time analytics are only possible if sensor data reliably flows into storage systems for processing. Devote sufficient resources to setting up scalable, high-throughput data pipelines from equipment all the way to cloud data lakes and warehouses. Leverage containerization and orchestration platforms to ease data pipeline management across factory locations and hybrid cloud infrastructure.

Apply out-of-the-box AI models first - Many cloud analytics providers offer pre-trained machine learning models for common manufacturing use cases like predictive maintenance, anomaly detection, automated root cause analysis, and more. Leverage these to accelerate time-to-value before investing in custom models.

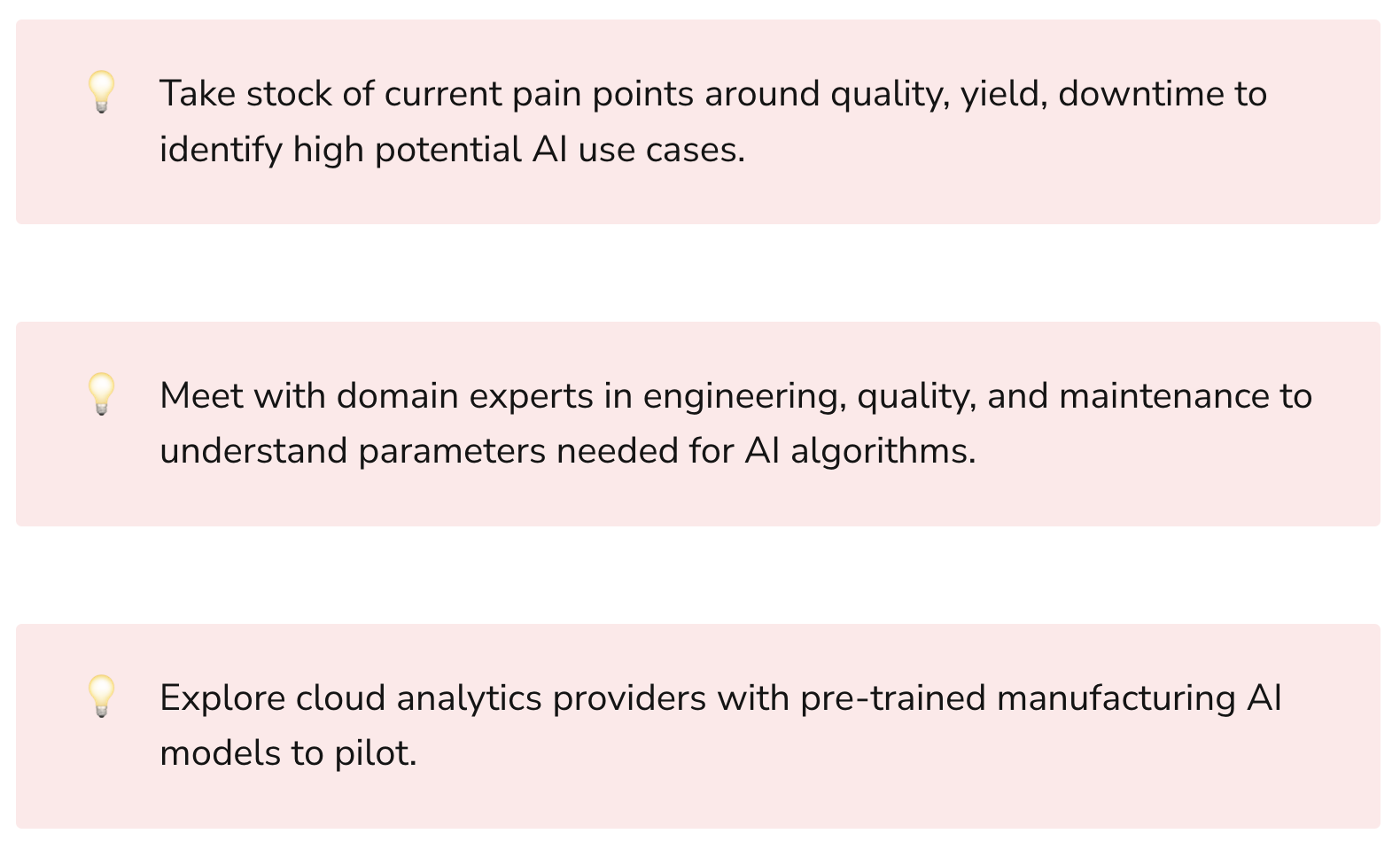

Involve domain experts early - Developing custom AI models requires involving process engineering experts early to contextualize data, define objectives, label datasets, and evaluate model effectiveness. Plan for continuous collaboration through deployment and monitoring.

Start with POCs, not massive projects - Focus initial efforts on AI proofs of concept that target one or two high-value use cases. Demonstrate quick time-to-value before committing to multi-year digital transformation initiatives.

Monitor model drift - The accuracy of AI/ML models depends on the data they are trained on. If equipment, processes, or inputs change over time, the models may no longer reflect reality. Continuously monitor model performance on live data and re-train models as needed.
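Alongside tracking accuracy on live data, a common complementary check is to compare the distribution of a model input at training time with its distribution in production. The sketch below uses the Population Stability Index (PSI) for that comparison. The function name, the bin count, the furnace temperatures, and the often-quoted rule of thumb that a PSI above roughly 0.25 signals a significant shift are assumptions for illustration; the right alert level depends on the process.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one feature; larger values mean more drift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(data):
        counts = [0] * bins
        for v in data:
            idx = int((v - lo) / width)
            # Values outside the training range land in the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids taking the logarithm of zero for empty bins.
        return [max(c / len(data), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Synthetic temperatures: live data has shifted 4 degrees above training data.
training_temps = [180 + (i % 20) * 0.5 for i in range(200)]
live_temps = [t + 4.0 for t in training_temps]

psi = population_stability_index(training_temps, live_temps)
print(f"PSI = {psi:.2f}")
if psi > 0.25:  # rule-of-thumb alert level, an assumption
    print("Input distribution has shifted; consider re-training.")
```

A check like this does not need labels, so it can raise a warning well before enough labeled outcomes accumulate to measure accuracy directly.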

Real-World Examples of AI and Real-time Production Analytics

Leading global manufacturers have already adopted AI and real-time production analytics across use cases:

Early detection of faulty assemblies at an automotive plant

An automotive OEM uses camera-based AI defect detection to analyze thousands of parts per minute as they move down the assembly line. The models identify cracks, holes, and other anomalies in metal chassis parts. By catching defects early, prior to final vehicle assembly, the manufacturer prevents costly rework.

Optimizing an engine assembly line’s model mix decisions

An aerospace turbine manufacturer leverages AI to decide the order in which engine models run on a common assembly line for maximum yield. The algorithms combine data on past runs, asset availability, environmental conditions, and more to support these sequencing decisions. Outcomes included a 6% increase in productivity.

Reducing scrap rates through real-time quality optimization

A steel manufacturer uses real-time sensor data from blast furnaces combined with AI to adjust inputs and processing parameters. The predictive models optimize temperatures, material feed rates, oxygen levels, and more to minimize quality defects. Scrap rates dropped 12%, saving millions annually.

Cutting downtime through AI-powered predictive maintenance

A plastics manufacturer avoids asset failures and downtime by having AI algorithms continuously monitor sensor data from molding presses and ovens. The models predict failures up to 2 weeks in advance, allowing ample time for maintenance planning without impacting production schedules.

These examples showcase the breadth of value AI can deliver for industrial companies by effectively leveraging sensor data from across the factory floor.

Key Takeaways

With the influx of real-time data from IIoT, manufacturers have new opportunities to optimize. By taking advantage of modern storage, real-time processing, and AI in the cloud, manufacturers can uncover insights that significantly improve productivity, quality, and yield.

Getting started does not require massive upfront investments. Target quick-win applications via focused proofs of concept.

1. What types of data do manufacturers require for AI and real-time analytics?

Manufacturers need high-frequency time-series data from sensors and connected equipment on the production line. This includes readings like temperature, pressure, flow rate, vibration, voltage, or current over time from critical assets. Images from quality inspection cameras are also valuable for defect analysis. Beyond equipment data, manufacturers also need contextual data from Manufacturing Execution Systems (MES) related to cycle times, yield, final test outcomes etc. to serve as labels.

2. How much data is required to train AI models?

The data needs vary based on the AI/ML technique used. Simple regression models may only need a few hundred labeled examples whereas deep neural networks require vast volumes - hundreds of thousands to millions of labeled data points in some cases. The key is working with experts to identify the minimum viable data thresholds for different use cases and facilitating the data collection pipelines and annotation workflows needed to support model development.

3. What are some common challenges with leveraging sensor data & how can they be mitigated?

Lack of common data formats, ad hoc and unreliable data flows, and inadequate data governance are common challenges manufacturers face. The best practice is to develop a centralized data lake architecture with validated ingestion pipelines, metadata management, quality checks, and a governance framework for production sensor data. Containerization & orchestration can help scale & manage pipelines.

4. How quickly can AI models provide insights with real-time data?

With structured streams of sensor data feeding validated pipelines, AI models built for low-latency scoring such as online neural networks can output predictions, classifications, or anomalies within milliseconds. This enables timely closed loop actions or alerts based on results.

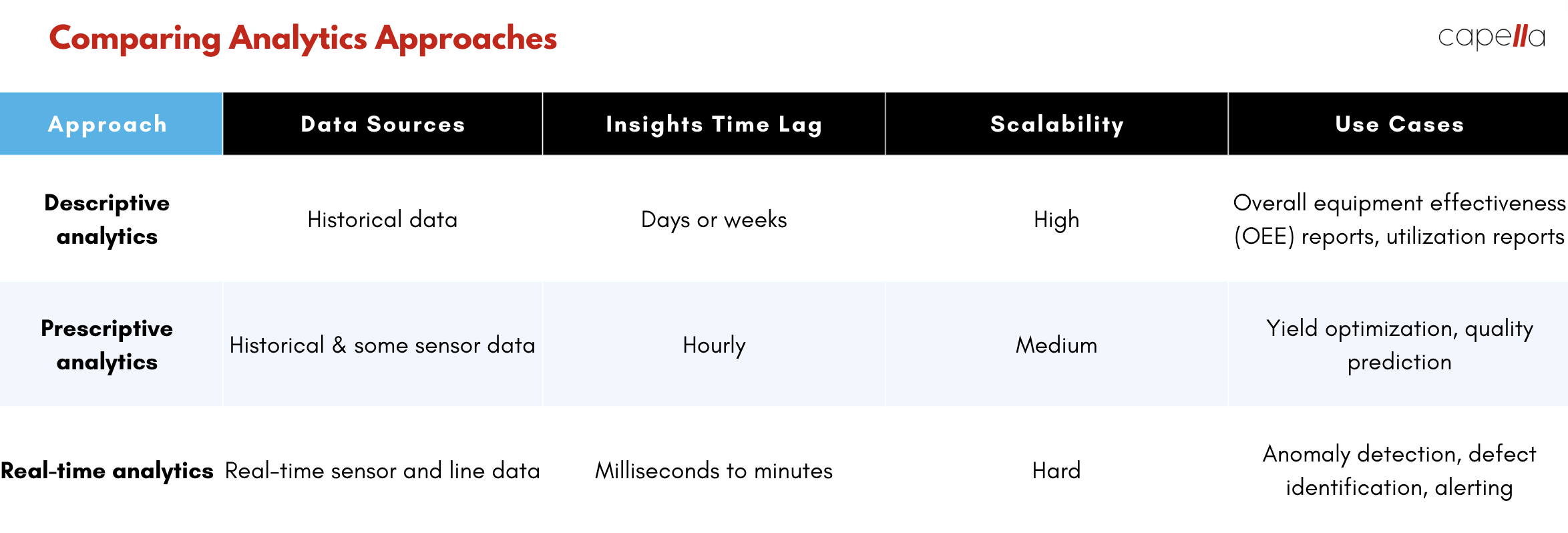

5. How do you select the right analytics technique for different applications?

It depends on the business objective and available data. Problems with historical supervised examples lend themselves to techniques like regression, random forests etc. Unsupervised learning suits exploring unseen patterns over time. Optimization tasks may warrant reinforcement learning approaches through digital twins. Discuss requirements with analytics architects to match analytics approaches accordingly.

6. Where should manufacturers start on their AI & analytics journey?

The best practice is to start with a focused pilot project addressing a single high-impact use case like reducing scrap. Get the data pipelines from sensors to cloud storage flowing reliably before layering on analytics capabilities. Then implement either off-the-shelf AI models or low-code platforms for custom models targeted to the defined use case rather than taking a broad approach.

7. What are the typical steps for developing custom AI models?

Key steps involve: 1) Collecting data on the relevant parameters 2) Ingesting the data & labeling the model's target variables 3) Splitting the data into training, validation & test sets 4) Iteratively testing model architectures like neural networks 5) Evaluating performance metrics on held-out test data 6) Deploying the validated model to score real-time production data.
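The data-splitting step deserves extra care with production data, because readings are ordered in time. A minimal sketch, assuming each record carries a `timestamp` field (a hypothetical schema, as are the `temp_c` and `defect` fields), is to split chronologically so the model is always evaluated on data recorded after its training data:

```python
def chronological_split(records, train_frac=0.7, val_frac=0.15):
    """Split time-stamped records into train, validation, and test sets.

    Records are sorted by timestamp first, so later sets never contain
    data older than the sets before them.
    """
    ordered = sorted(records, key=lambda r: r["timestamp"])
    n = len(ordered)
    train_end = round(n * train_frac)
    val_end = round(n * (train_frac + val_frac))
    return ordered[:train_end], ordered[train_end:val_end], ordered[val_end:]


# Synthetic records, deliberately supplied in reverse time order.
records = [
    {"timestamp": t, "temp_c": 200.0 + (t % 7), "defect": t % 10 == 0}
    for t in reversed(range(100))
]
train, val, test = chronological_split(records)
print(len(train), len(val), len(test))
```

A random shuffle would let the model peek at readings taken after the ones it is tested on, which tends to make offline metrics look better than live performance.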

8. What infrastructure is required to operationalize real-time analytics?

Key technology ingredients include: Industrial IoT sensors & data acquisition devices, reliable connectivity & standard data formats, scalable message queues like Kafka, time-series databases like InfluxDB for temporal data, distributed stream processors, container orchestration platforms like Kubernetes, and serverless platforms for deploying analytics microservices.

9. How can manufacturers ensure reliability of real-time analytics applications?

To ensure robust real-time analytics, have automated CI/CD pipelines for testing & deployment. Infrastructure should follow cloud-native principles leveraging containers & microservices so it can quickly scale up under load without crashing. Have monitoring & logs in place to track application health & data streams. Design modular components that isolate failures & provide fallbacks. Implement model re-training, A/B testing etc. for updating analytics logic.

10. What are some key metrics manufacturers should track with AI/ML projects?

Relevant key performance indicators include: a) Data pipeline ingestion rates b) Model accuracy metrics on test datasets c) Precision & recall specific to the target defect d) Prediction or classification latency e) Improvements in operational metrics such as yield & downtime that result from using analytics. Ongoing tracking provides insights on data quality, model drift when processes change, and business impact.
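Precision and recall are worth spelling out, since plain accuracy can look excellent when defects are rare. The sketch below computes both from inspection labels and model predictions; the function name and the eight sample parts are made up for illustration, with 1 meaning "defective".

```python
def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary defect labels (1 = defect)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)        # defects caught
    fp = sum(1 for t, p in pairs if not t and p)    # good parts flagged
    fn = sum(1 for t, p in pairs if t and not p)    # defects missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Illustrative inspection outcomes and model predictions for eight parts.
actual_defect = [1, 0, 1, 1, 0, 0, 1, 0]
predicted_defect = [1, 0, 0, 1, 1, 0, 1, 0]

precision, recall = precision_recall(actual_defect, predicted_defect)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Precision tells you how many flagged parts were truly defective (the cost of false alarms), while recall tells you how many real defects were caught (the cost of escapes). Which one to favor depends on whether rework or a field failure is more expensive.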

Rasheed Rabata

Is a solution and ROI-driven CTO, consultant, and system integrator with experience in deploying data integrations, Data Hubs, Master Data Management, Data Quality, and Data Warehousing solutions. He has a passion for solving complex data problems. His career experience showcases his drive to deliver software and timely solutions for business needs.